Our latest work on container management was recently accepted to IEEE TPDS:

LANDLORD: Coordinating Dynamic Software Environments to Reduce Container Sprawl

This paper is the result of a continuing collaboration between the CCL at Notre Dame and the Parsl group at the University of Chicago, led by Dr. Kyle Chard. Recent PhD grad Tim Shaffer led this work as part of a DOE Computational Science Graduate Fellowship, and current PhD student Thanh Phung joined the project and helped us to view the problem from a clustering perspective.

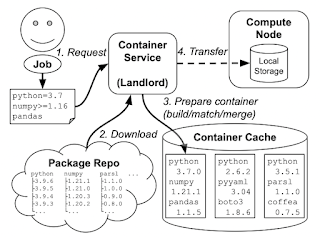

The problem is this: a variety of online services (like Binder, FuncX, and others) generate container images from software environment specifications, like a list of Conda packages. These images are used to execute code on clusters: sometimes long-running code like simulations, but also sometimes short bits of code, maybe even a single Python function call. If every user of the system asks for a slightly different software environment, then the system will quickly run out of space for all those large container images ("container sprawl"). So, we need some entity to manage the space and decide which containers to generate from packages, and which ones to delete.

We observe that multiple requests might be satisfied by the same container. For example, these three jobs all express some constraints on packages A and B. (And one of those jobs doesn't care about the version at all.) If we figure out the overlap between those requests, we can just use a single image to satisfy all three.
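To make the overlap idea concrete, here is a minimal sketch in Python. It is not the Landlord implementation: the representation (a dict from package name to a pinned version, or `None` for "any version"), the function names, and the version numbers are all assumptions made for illustration.

```python
from typing import Dict, Optional

# Hypothetical representation: package name -> pinned version, or None for "any version".
Spec = Dict[str, Optional[str]]

def compatible(a: Spec, b: Spec) -> bool:
    """Two specs conflict only if they pin the same package to different versions."""
    for pkg, ver in a.items():
        other = b.get(pkg)
        if ver is not None and other is not None and ver != other:
            return False
    return True

def merge_specs(a: Spec, b: Spec) -> Spec:
    """Combine two compatible specs, keeping a pinned version wherever one exists."""
    if not compatible(a, b):
        raise ValueError("specs pin conflicting versions")
    merged = dict(a)
    for pkg, ver in b.items():
        if merged.get(pkg) is None:
            merged[pkg] = ver
    return merged

def satisfies(image: Spec, request: Spec) -> bool:
    """An image satisfies a request if it has every requested package at an acceptable version."""
    return all(
        pkg in image and (ver is None or image[pkg] == ver)
        for pkg, ver in request.items()
    )

# Three illustrative jobs constraining packages A and B (version numbers are made up).
jobs = [
    {"A": "1.0", "B": "2.0"},
    {"A": "1.0"},
    {"A": None, "B": "2.0"},  # this job does not care which version of A it gets
]

image: Spec = {}
for job in jobs:
    image = merge_specs(image, job)
```

After the loop, `image` is a single specification that every job in `jobs` accepts, so one container built from it could serve all three requests.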

Going further, we can view this as an incremental online clustering problem. At any given moment, there are a certain number of containers instantiated. If a request matches one already, that's a hit and we just use it. Otherwise, that's a miss and we have two choices: either insert a brand new container image that matches the request exactly, or merge an existing container with some new packages in order to satisfy the request. We decide what to do based on a single parameter alpha, which sets a threshold on the distance between the request and an existing container.
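The hit / merge / insert decision can be sketched roughly as follows. This is an illustration under stated assumptions, not the algorithm from the paper: the `ImageCache` class, the use of plain package sets without versions, and the Jaccard similarity metric are all hypothetical choices. In particular, the sketch assumes a request is merged into the closest image when their similarity is at least alpha, a direction chosen only so that a small alpha produces a few large images, as this post describes. Eviction of old images is left out.

```python
from typing import List, Set

def jaccard_similarity(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection over size of the union; 1.0 for two empty sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

class ImageCache:
    """Hypothetical online cache of container images, each modeled as a set of packages."""

    def __init__(self, alpha: float) -> None:
        self.alpha = alpha  # assumed: similarity threshold for merging
        self.images: List[Set[str]] = []

    def request(self, packages: Set[str]) -> str:
        # Hit: some existing image already contains every requested package.
        for image in self.images:
            if packages <= image:
                return "hit"

        # Miss: look for the most similar existing image.
        best = max(
            self.images,
            key=lambda image: jaccard_similarity(image, packages),
            default=None,
        )
        if best is not None and jaccard_similarity(best, packages) >= self.alpha:
            best |= packages  # merge: grow the existing image in place
            return "merge"

        # Otherwise insert a new image that matches the request exactly.
        self.images.append(set(packages))
        return "insert"

cache = ImageCache(alpha=0.5)
outcomes = [
    cache.request({"numpy", "scipy"}),
    cache.request({"numpy"}),
    cache.request({"numpy", "scipy", "pandas"}),
    cache.request({"root"}),
]
```

With `alpha=0` in this sketch, every miss after the first is merged, so the cache collapses into one ever-growing image. With `alpha` above 1, nothing is ever merged and each distinct miss gets its own image.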

OK, so now how do we pick alpha? We evaluated this by running the Landlord algorithm on traces of software requests from high energy physics workloads and on a public trace of Binder requests.

There are two extremes to avoid. If alpha is very small, then we end up with a small number of very large containers. This is good for managing the shared cache, but bad because we have to keep moving very large containers out to the compute nodes. (And they may not fit!) On the other hand, if alpha is very large, then we end up with a large number of small containers. This results in a lot of duplication of packages, so the shared cache fills up faster, but the images are small and easy to move around.

As in most computer systems, there isn't one single number that is the answer: rather, it is a tradeoff between desiderata. Here, the sweet spot is around alpha=0.8. The good news is that the system has a broad "operational zone" around that value in which the tradeoff is productive.

Sound interesting? Check out the full paper:

- Tim Shaffer, Thanh Son Phung, Kyle Chard, and Douglas Thain, LANDLORD: Coordinating Dynamic Software Environments to Reduce Container Sprawl, IEEE Transactions on Parallel and Distributed Systems, to appear in 2023. DOI: 10.1109/TPDS.2023.3241598
