|

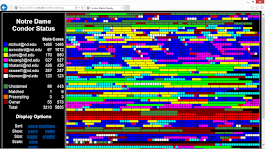

HTCondor Cluster View

|

In the CCL, we study the design and implementation of scalable systems and applications that run on very large computing systems. It is not unusual for us to encounter an application that runs well on a few nodes but causes trouble when running on thousands of nodes. This happened recently with a simulation written in Julia that was using HTCondor to run millions of tasks on several thousand nodes of our campus cluster. It ran fine on one node, but when deployed to a thousand nodes, the simulation would cause a total meltdown of the shared filesystem, even though its I/O needs were relatively small. What was going on?

Here is what we found:

The Julia programming language uses a just-in-time compiler to generate efficient machine code before execution. Julia organizes code in modules, and user applications in projects, where a project is a list of modules. By default, the compilation step is performed every single time an application is executed and considers all the modules listed in the given project. If an end user sets up an application in the normal way, the result is that the code will be compiled simultaneously on all nodes of the system!

Internally, Julia checks the project's list of modules, looks for files with a modification time more recent than the machine code already available, and, if needed, generates new machine code. As usual, the modification times are obtained using the stat() system call. To give some perspective, the simulation used a dozen standard Julia modules, resulting in 12,000 stat() calls even when no recompilation was needed, while the number of open() calls to the needed files was less than 10. In particular, the file that lists the modules in the project (Project.toml) received close to 2,000 stat() calls, but only one open() call. For comparison, the number of calls to open() and stat() for data files particular to the application was less than 5.
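One way to obtain a per-call breakdown like this, assuming strace is available on the node, is to split its output into stat-family and open-family calls. The following is a minimal sketch: the function name `count_file_calls` is made up for illustration, and the list of syscall names is an assumption that may need adjusting for a particular kernel and libc.

```shell
#!/bin/sh
# Count stat-family and open-family calls in strace output read from stdin.
# count_file_calls is a hypothetical helper name, not part of strace.
#
# Intended use (strace writes its trace to stderr, hence 2>&1):
#   strace -f -e trace=%file julia my_modules.jl 2>&1 | count_file_calls
count_file_calls() {
    awk '
        # With -f, lines start with "[pid N] " (or "N " when -o is used);
        # strip that prefix so the syscall name is at the start of the line.
        { sub(/^(\[pid +[0-9]+\] |[0-9]+ +)/, "") }
        /^(stat|lstat|newfstatat|statx)\(/ { stats++ }
        /^(open|openat)\(/                 { opens++ }
        END { printf "stat=%d open=%d\n", stats, opens }
    '
}
```

Counting the two families separately makes the imbalance visible at a glance, which a single combined line count would hide.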

When executed on a single machine with a local file system, even a few thousand system calls may go unnoticed by the user. However, they become a big problem when running at scale on a cluster where all nodes share a common networked filesystem. If one thousand nodes start at once, the shared filesystem must field twelve million stat() operations just to determine that nothing has changed. Thus, the scale at which the simulation can run is limited by factors hidden from the end user: not the cores, memory, or disk available, but file system operations that become expensive when moving from a local to a shared setting.
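The arithmetic behind that figure is simple enough to script as a back-of-the-envelope check. In the sketch below, the node count and the per-run stat() count come from the numbers above, while the server rate of 50,000 metadata operations per second is purely a hypothetical value chosen for illustration, not a measurement of our filesystem.

```shell
#!/bin/sh
# Back-of-the-envelope metadata load for a batch of simultaneous task starts.
# Arguments: NODES STATS_PER_RUN OPS_PER_SEC
# OPS_PER_SEC is a hypothetical sustained rate for the file server,
# not a measured value.
metadata_load() {
    nodes=$1
    stats_per_run=$2
    ops_per_sec=$3
    total=$((nodes * stats_per_run))
    seconds=$((total / ops_per_sec))
    echo "total_stat_calls=$total seconds_at_assumed_rate=$seconds"
}

# 1,000 nodes, 12,000 stat() calls per run, assumed 50,000 ops/s.
metadata_load 1000 12000 50000
# prints: total_stat_calls=12000000 seconds_at_assumed_rate=240
```

Under that assumed rate, the server would spend minutes answering freshness checks before any task does useful work.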

Once the problem is understood, the workaround is to pre-compile a binary image with the needed modules, which is then shipped together with each task. This reduced the number of stat() calls from the original 12,000 to about 200 per invocation. The image is shipped compressed with each job, reducing its size from 250MB to 50MB, and is decompressed just before the task starts execution. Generating the binary image takes about 5 minutes and is done prior to job submission.
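The ship-compressed, unpack-on-arrival pattern can be sketched with a few shell functions. This is only an illustration under stated assumptions: the function names and the file names `sysimage.so` and `simulation.jl` are hypothetical placeholders, gzip stands in for whatever compressor is actually used, and the job's working directory is assumed to be on the worker's local disk.

```shell
#!/bin/sh
# Sketch of the ship-compressed, unpack-on-arrival pattern.
# All function and file names here are hypothetical placeholders.

# On the submit host: compress the image, keeping the original.
pack_image() {
    gzip -c "$1" > "$1.gz"
}

# On the worker node: unpack the shipped image into the job's
# working directory (assumed to be local scratch space).
unpack_image() {
    gzip -dc "$1" > "$2"
}

# On the worker node: run one task against the unpacked image.
# Remaining arguments are passed through to julia unchanged.
run_task() {
    image=$1
    shift
    julia --sysimage "$image" "$@"
}
```

A job wrapper would then call something like `unpack_image sysimage.so.gz ./sysimage.so` followed by `run_task ./sysimage.so simulation.jl`.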

The user application made the generation of the binary image much easier because all the dependencies were listed in a single file. As an example, consider this file that simply lists some modules:

    # my_modules.jl
    using Pkg
    using Random
    using Distributions
    using DataFrames
    using DataStructures
    using StatsBase
    using LinearAlgebra

If we count the number of system calls that involve filenames, we get:

    $ strace -f -e trace=%file julia my_modules.jl |& grep -E '(stat|open)' | wc -l
    5106

These calls are repeated every time the program runs. Using the PackageCompiler module, we can generate a Julia system image as follows:

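    # comp.jl
    # run as: julia comp.jl

    # Modules visible in Main before the application's dependencies are loaded.
    loaded_by_julia = filter((x) -> typeof(eval(x)) <: Module && x ≠ :Main, names(Main,imported=true));

    # Load the application's dependencies.
    include("my_modules.jl")

    # Modules visible in Main afterwards; the difference is what the application added.
    loaded_all = filter((x) -> typeof(eval(x)) <: Module && x ≠ :Main, names(Main,imported=true));
    loaded_by_ch = setdiff(loaded_all, loaded_by_julia);

    println("Creating system image with:");
    println(loaded_by_ch);

    # Build a system image containing only the application's modules.
    using PackageCompiler;
    create_sysimage(loaded_by_ch; sysimage_path="sysimage.so", cpu_target="generic")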

Using the image, the number of file-related calls, and therefore the stress on the shared file system, is greatly reduced:

    $ strace -f -e trace=%file julia -Jsysimage my_modules.jl |& grep -E '(stat|open)' | wc -l
    353

As expected, the overhead per run also decreases: the runtime drops from about 10s to about 0.5s, which is significant for short-running tasks.

So what's the moral of the story?

1 - When moving from a single node to a distributed system, operations that were previously cheap may become more expensive. You can't fix what you can't measure, so use tools like strace to understand the system-call impact of your application.

2 - Avoid exponential behavior, even when individual costs are cheap. Every Julia import results in checking the freshness of that module, and then all of its dependencies recursively, and so leaf modules get visited over and over again. The Julia compiler needs to memoize those visits!
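To see why repeated visits add up, consider a toy model of the freshness check. This is not Julia's actual implementation: the module names and the layered dependency graph below are invented for illustration, and the sketch only counts how many times a module is checked with and without remembering earlier visits.

```shell
#!/bin/sh
# Toy model of recursive freshness checks over a hypothetical module graph.
# A depends on B1 and B2; each B depends on C1 and C2; each C depends on D.
deps() {
    case $1 in
        A)     echo "B1 B2" ;;
        B1|B2) echo "C1 C2" ;;
        C1|C2) echo "D" ;;
        *)     echo "" ;;
    esac
}

# Naive check: every import re-checks all of its dependencies.
check_naive() {
    visits=$((visits + 1))
    for d in $(deps "$1"); do
        check_naive "$d"
    done
}

# Memoized check: a module already seen in this run is skipped.
check_memo() {
    case $seen in
        *" $1 "*) return ;;
    esac
    seen="$seen$1 "
    visits=$((visits + 1))
    for d in $(deps "$1"); do
        check_memo "$d"
    done
}

visits=0
check_naive A
naive=$visits

visits=0
seen=" "
check_memo A
memo=$visits

echo "naive=$naive memoized=$memo"
# prints: naive=11 memoized=6
```

Even in this six-module graph the naive walk nearly doubles the work, and each additional layer of shared dependencies doubles the number of visits to the leaf again.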